What’s a token?

A token is a group of characters that a model treats as a single unit. It is typically a commonly occurring sequence of characters, and it may be a whole word or only a fragment of one. The exact definition of a token varies because each model has its own tokenisation process.
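To make this concrete, here is a minimal sketch of a toy tokeniser. It is not how any particular model works: the function `greedy_tokenize` and the vocabularies `vocab_a` and `vocab_b` are invented for illustration, and real tokenisers learn their vocabularies from data and use more elaborate algorithms. The sketch only shows that the same text can split into different tokens depending on the vocabulary.

```python
def greedy_tokenize(text, vocab):
    """Split text left to right, always taking the longest vocabulary match.

    Characters that match nothing in the vocabulary become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate substring first, then shorter ones.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No vocabulary entry starts here: emit one character as a token.
            tokens.append(text[i])
            i += 1
    return tokens


# Two hypothetical vocabularies standing in for two different models.
vocab_a = {"token", "isation", " is", " fun"}
vocab_b = {"tok", "en", "is", "ation", " ", "fun"}

text = "tokenisation is fun"
tokens_a = greedy_tokenize(text, vocab_a)
tokens_b = greedy_tokenize(text, vocab_b)

print(tokens_a)  # ['token', 'isation', ' is', ' fun']
print(tokens_b)  # ['tok', 'en', 'is', 'ation', ' ', 'is', ' ', 'fun']
```

With the first vocabulary the sentence becomes 4 tokens; with the second it becomes 8, even though the text is identical.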

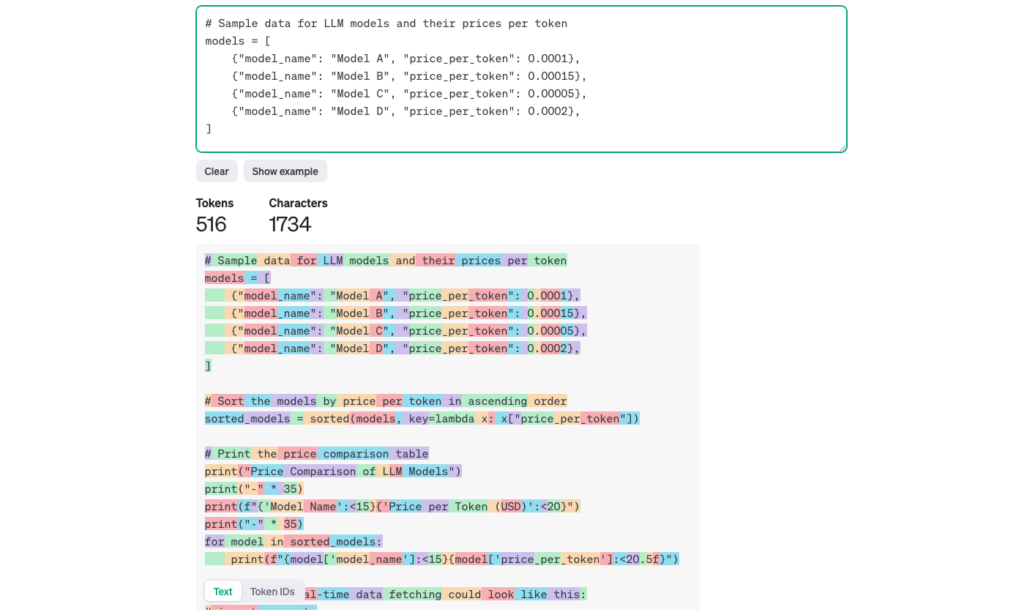

Generally, a token can be as large as an entire English word. However, longer or less common words might be broken down into multiple tokens. As a rule of thumb for English text, one token is about 4 characters, and roughly 100 tokens correspond to about 75 words. The exact ratio depends on the model and on the text.
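These averages can be turned into a quick back-of-the-envelope estimator. The sketch below is only an approximation built on the two rules of thumb above (about 4 characters per token, about 100 tokens per 75 words); the function names are made up for this example, and the actual count for a given model can only be obtained from that model's own tokeniser.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb: about 4 characters per token
TOKENS_PER_75_WORDS = 100  # rule of thumb: about 100 tokens per 75 words


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text: str) -> int:
    """Rough token estimate based on whitespace-separated word count."""
    n_words = len(text.split())
    return math.ceil(n_words * TOKENS_PER_75_WORDS / 75)


sample = "Understanding tokens helps you manage costs."
print(estimate_tokens_from_chars(sample))
print(estimate_tokens_from_words(sample))
```

The two estimates will usually differ a little, since they rest on different averages. Treat either as a planning figure rather than an exact count.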

Understanding tokens helps you better interact with LLMs, manage costs, and make the most efficient use of the model’s capabilities.